Meta-evaluation and evaluation standards in social policy

15/09/21: By Rahel Kahlert, European Centre

Meta-evaluation is an “evaluation of evaluations” that aims to improve future evaluation work. Because the topic is highly relevant for professionals working in social policy, the third module of the virtual Bridge-Building Summer School of Evaluation in Social Policies (25-27 August 2021) was devoted to meta-evaluation and evaluation standards in social policy. This was one of eight modules at the Summer School. The Summer School combined three things: capacity building through knowledge and information sharing on state-of-the-art research and policy practice, the exchange of experiences among participants, and the development of ideas and proposals for “real-world” projects. “Real world” refers to the idea that evaluations take place under budget, time, data, and political constraints (see Bamberger & Mabry, 2019). A central focus was interactive, hands-on small-group work with practical exercises to foster peer learning, and eye-level interactions were a core building block. The thirteen participants shared their expertise in either conducting or commissioning evaluations. They were social policy professionals from both the public and private sectors in the Western Balkan and Eastern Partnership regions (in particular, Azerbaijan, Albania, Bosnia and Herzegovina, Kosovo, Moldova, North Macedonia, and Ukraine).

The module on meta-evaluation and standards began with a short introduction to meta-evaluation by guest speaker Thomas Vogel, head of programmes at Horizon 3000, the largest Austrian non-governmental development cooperation organisation, where evaluation plays a key role in learning and accountability. Mr. Vogel explained the difference between evaluation synthesis and meta-evaluation. Evaluation synthesis analyses a series of evaluation reports and aggregates their findings at a higher level, such as the programme, country, or sector level. Its aim is to find out how programmes or projects can be improved. Meta-evaluation also analyses a series of evaluation reports, but it assesses the quality of each evaluation. Its aim is to find out how evaluations can be improved in the future. Meta-evaluation can enhance the quality of evaluation by applying evaluation standards to all dimensions of quality, including scope, methodology, independence of evaluators, and utility.

Rahel Kahlert then gave a brief presentation on widely used evaluation standards, such as the UNEG Norms and Standards (updated in 2016), which are meant to be upheld in the conduct of any evaluation. Professional evaluation associations such as the German Evaluation Society and the Swiss Evaluation Society have developed their own standards for evaluators to apply in their daily professional work. Common features include accuracy (methodological and technical standards), propriety (legal and ethical standards), utility (serving the information needs of intended users), and feasibility (realistic, cost-effective evaluation). As evaluators, we have to remind ourselves that we are dealing with people and intervening in their settings. It is therefore important to follow ethical guidelines and protect human participants, as in any research setting.

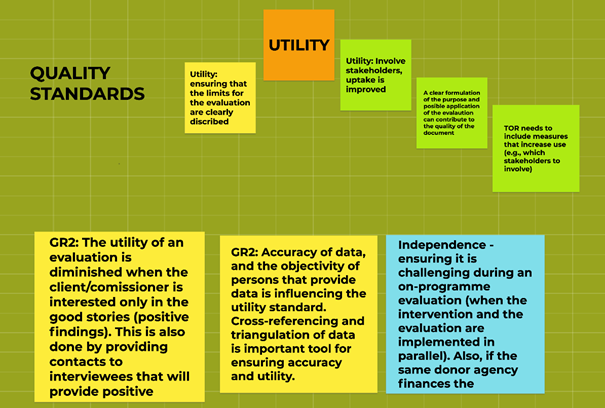

The small-group breakout sessions addressed the question: “How can standards enhance the quality of an evaluation?” Teams of four to five people selected two or three evaluation standards and applied them to a “real-world” setting. The teams used the online whiteboard tool Google Jamboard (see Figure 1) to make their case by adding virtual sticky notes. For all three teams, utility stood out as an important evaluation standard. Involving stakeholders was regarded as a means of enhancing utility, i.e. improving the uptake of findings. This may sometimes conflict with the standard of independence, when donors or programme staff want to influence evaluation findings (“interested only in the good stories (positive findings)”). Accuracy can be enhanced through triangulation and cross-referencing of data, which can ultimately also improve utility and uptake.

In summary, the module on meta-evaluation and standards was a lively, interactive experience that engaged all participants and set the scene for further productive exchanges. At the end of the Summer School, participants stated that they intend to apply evaluation standards more consistently in their evaluation work and to engage in meta-evaluation in the future to ensure evaluation quality.